Understanding H5ClusterRun and H5ClusterRunSummary

fmristore Package

2025-06-25

Source:vignettes/H5ClusterRun.Rmd

H5ClusterRun.Rmd

library(fmristore)

#>

#> Attaching package: 'fmristore'

#> The following object is masked from 'package:stats':

#>

#> offset

library(neuroim2)

#> Loading required package: Matrix

#>

#> Attaching package: 'neuroim2'

#> The following object is masked from 'package:base':

#>

#> scaleOverview

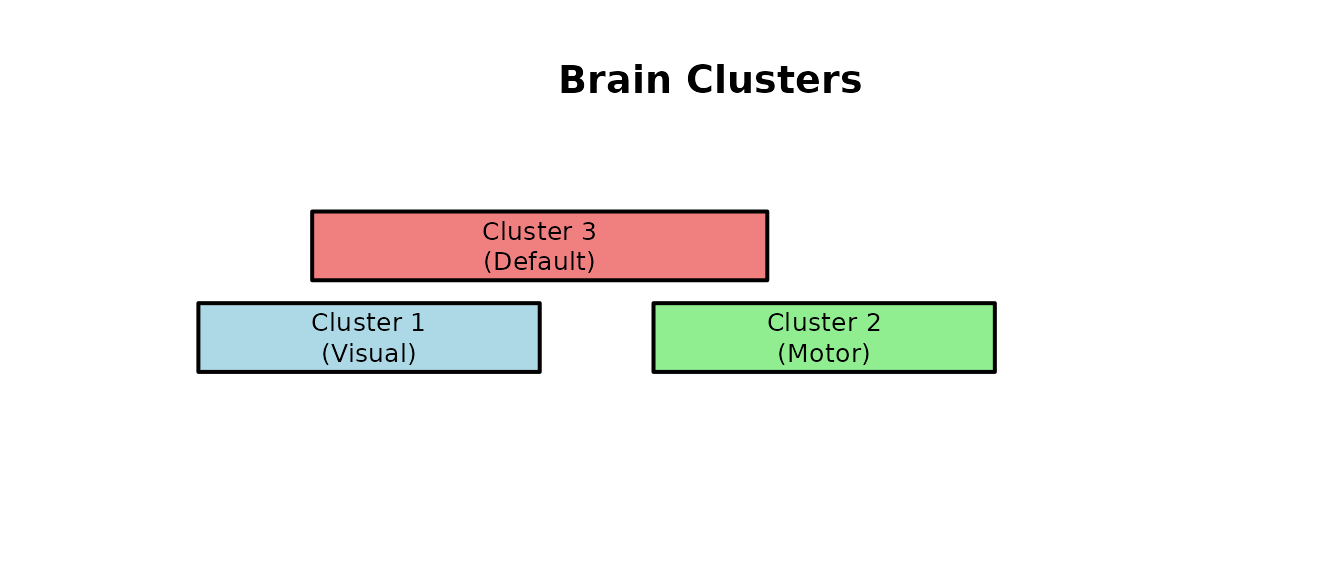

When working with clustered fMRI data, you have two storage options:

- H5ClusterRun: Stores complete time series for every voxel

- H5ClusterRunSummary: Stores only averaged time series per cluster

This vignette explains when to use each and how they work.

H5ClusterRun: Full Voxel Data

When to Use

Use H5ClusterRun when you need: - Voxel-level precision

- Spatial patterns within clusters - Individual voxel time series

Example: Creating Full Data

# Setup: Small brain with 3 clusters

brain_dims <- c(10, 10, 3)

mask_array <- array(TRUE, brain_dims)

mask_array[1:2, 1:2, ] <- FALSE # Some non-brain voxels

mask <- LogicalNeuroVol(mask_array, NeuroSpace(brain_dims))

n_voxels <- sum(mask)

# Create 3 clusters

cluster_assignments <- rep(1:3, length.out = n_voxels)

clusters <- ClusteredNeuroVol(mask, cluster_assignments)

print(table(clusters@clusters))

#>

#> 1 2 3

#> 96 96 96Generate Time Series Data

n_timepoints <- 50

# Create data for each cluster

cluster_data <- list()

# Cluster 1: Sine wave pattern

n_vox_c1 <- sum(clusters@clusters == 1)

base_signal_c1 <- sin(seq(0, 4 * pi, length.out = n_timepoints))

cluster_data[["cluster_1"]] <- matrix(

rep(base_signal_c1, each = n_vox_c1) + rnorm(n_vox_c1 * n_timepoints, sd = 0.2),

nrow = n_vox_c1,

ncol = n_timepoints

)

# Cluster 2: Square wave pattern

n_vox_c2 <- sum(clusters@clusters == 2)

base_signal_c2 <- rep(c(-1, 1), length.out = n_timepoints)

cluster_data[["cluster_2"]] <- matrix(

rep(base_signal_c2, each = n_vox_c2) + rnorm(n_vox_c2 * n_timepoints, sd = 0.2),

nrow = n_vox_c2,

ncol = n_timepoints

)

# Cluster 3: Random noise

n_vox_c3 <- sum(clusters@clusters == 3)

cluster_data[["cluster_3"]] <- matrix(

rnorm(n_vox_c3 * n_timepoints),

nrow = n_vox_c3,

ncol = n_timepoints

)Save and Access Full Data

# Prepare run data

full_run_data <- list(

scan_name = "task_run",

type = "full",

data = cluster_data,

metadata = list(TR = 2.0, task = "visual")

)

# Write to HDF5

h5_file <- tempfile(fileext = ".h5")

write_clustered_experiment_h5(

filepath = h5_file,

mask = mask,

clusters = clusters,

runs_data = list(full_run_data),

overwrite = TRUE,

verbose = FALSE

)

# Load and explore

experiment <- H5ClusterExperiment(h5_file)

#> Clusters argument is NULL, attempting to read from HDF5 file (/cluster_map).

full_run <- experiment@runs[["task_run"]]

print(full_run)

#>

#> H5ClusterRun

#> ----------------------------------------

#> Run Info

#> * Scan Name: task_run

#> * Time points: 50

#>

#> Shared Info (from H5ClusteredArray)

#> * Active voxels in mask: 288

#> * Number of clusters: 3

#>

#> Storage: HDF5 File

#> * Path: /tmp/RtmpKXQTtf/file21b06ac5cd5.h5

#> * Run clusters path: /scans/task_run/clusters (exists)

print(paste("Dimensions:", paste(dim(full_run), collapse = " x ")))

#> [1] "Dimensions: 10 x 10 x 3 x 50"Extract Voxel Time Series

# Get time series for specific voxels

voxel_indices <- c(1, 5, 10)

voxel_ts <- series(full_run, i = voxel_indices)

# Plot the time series

matplot(voxel_ts, type = "l", lty = 1,

main = "Individual Voxel Time Series",

xlab = "Time", ylab = "Signal")

legend("topright", legend = paste("Voxel", voxel_indices),

col = 1:3, lty = 1)

H5ClusterRunSummary: Averaged Data

When to Use

Use H5ClusterRunSummary when you need: - Regional

averages only - Reduced storage space - Faster processing -

Network-level analyses

Example: Creating Summary Data

# Create averaged time series per cluster

summary_data <- matrix(

c(base_signal_c1, # Cluster 1 average

base_signal_c2, # Cluster 2 average

rnorm(n_timepoints)), # Cluster 3 average

nrow = n_timepoints,

ncol = 3

)

# Add some noise

summary_data <- summary_data + rnorm(length(summary_data), sd = 0.1)

# Prepare summary run data

summary_run_data <- list(

scan_name = "rest_run",

type = "summary",

data = summary_data,

metadata = list(TR = 2.0, task = "rest")

)Save and Access Summary Data

# Write both full and summary to same file

write_clustered_experiment_h5(

filepath = h5_file,

mask = mask,

clusters = clusters,

runs_data = list(full_run_data, summary_run_data),

overwrite = TRUE,

verbose = FALSE

)

# Load and access summary run

experiment <- H5ClusterExperiment(h5_file)

#> Clusters argument is NULL, attempting to read from HDF5 file (/cluster_map).

summary_run <- experiment@runs[["rest_run"]]

print(summary_run)

#>

#> ── H5ClusterRunSummary ─────────────────────────────────────────────────────────

#>

#> ── Run Summary Info ──

#>

#> • Scan Name : rest_run

#> • Time points : 50

#> • Summary Dset : summary_data

#> Cluster Names (3):

#> ℹ Cluster1, Cluster2, Cluster3

#> Cluster IDs (3):

#> ℹ 1, 2, 3

#>

#> ── Shared Info (from H5ClusteredArray) ──

#>

#> • Active voxels in mask : 288

#> • Number of clusters (map) : 3

#>

#> ── Storage: HDF5 File ──

#>

#> • Path : /tmp/RtmpKXQTtf/file21b06ac5cd5.h5

#> • Status : Valid and Open

#> • Summary Dataset : /scans/rest_run/clusters_summary/summary_data (✔ exists)

#>

# Get the summary matrix

summary_matrix <- as.matrix(summary_run)

print(paste("Summary dimensions:", paste(dim(summary_matrix), collapse = " x ")))

#> [1] "Summary dimensions: 50 x 3"Visualize Summary Data

# Plot cluster averages

matplot(summary_matrix, type = "l", lty = 1, lwd = 2,

main = "Cluster Average Time Series",

xlab = "Time", ylab = "Average Signal",

col = c("blue", "green", "red"))

legend("topright", legend = paste("Cluster", 1:3),

col = c("blue", "green", "red"), lty = 1, lwd = 2)

Comparing Storage Efficiency

# Check dimensions

full_voxels <- sum(sapply(cluster_data, nrow))

full_elements <- full_voxels * n_timepoints

summary_elements <- prod(dim(summary_matrix))

cat("Full run storage:\n")

#> Full run storage:

cat(" Voxels:", full_voxels, "\n")

#> Voxels: 288

cat(" Total values:", full_elements, "\n\n")

#> Total values: 14400

cat("Summary run storage:\n")

#> Summary run storage:

cat(" Clusters:", ncol(summary_matrix), "\n")

#> Clusters: 3

cat(" Total values:", summary_elements, "\n\n")

#> Total values: 150

cat("Compression ratio:", round(full_elements / summary_elements, 1), ":1\n")

#> Compression ratio: 96 :1Practical Guidelines

Choose H5ClusterRunSummary when:

-

Connectivity analysis

# Example: Cluster-to-cluster correlation cluster_connectivity <- cor(summary_matrix) -

Group comparisons

Memory Considerations

# Estimate memory usage

full_memory_mb <- object.size(cluster_data) / 1024^2

summary_memory_mb <- object.size(summary_matrix) / 1024^2

cat("Memory usage:\n")

#> Memory usage:

cat(" Full run:", format(full_memory_mb, digits = 3), "MB\n")

#> Full run: 0.111 bytes MB

cat(" Summary run:", format(summary_memory_mb, digits = 3), "MB\n")

#> Summary run: 0.002 bytes MB

cat(" Savings:", format(full_memory_mb - summary_memory_mb, digits = 3), "MB\n")

#> Savings: 0.109 bytes MBKey Takeaways

- H5ClusterRun = Full resolution, larger storage

- H5ClusterRunSummary = Regional averages, compact storage

- Both can coexist in the same H5ClusterExperiment

- Choose based on your analysis needs

- Summary runs are perfect for connectivity and network analyses

- Full runs are essential for voxel-level statistics

Next Steps

- See

vignette("H5ClusterExperiment")for working with multiple runs - Explore connectivity analyses with summary data

- Learn about mixed designs (some runs full, some summary)